I use Team Foundation Server over a VPN connection from my house in the UK to Teamprise HQ in Champaign, IL. Performance has been satisfactory, but I've always been jealous of the performance the guys in the office get over their 1 Gbps connection. We have gigabit networks on both sides of the Atlantic, but the ADSL line to my house, at 2 Mbps downstream and 256 kbps upstream, slows everything down. As an experiment, I've just installed a very small machine as a TFS Version Control Proxy Server, and the performance increase is astounding.

In my office I had an old Dell Optiplex SX270 lying around. Measuring 240x240x85 mm (~9x9x3 inches), it used to run woodwardweb.com before Teamprise kindly offered to host my blog for me. It's not a powerhouse by any stretch of the imagination: a 2.4 GHz P4 with 512 MB RAM and a 5,400 rpm 20 GB laptop hard disk. I picked it up from the Dell Outlet store for less than £300 over 3 years ago. However, it runs Windows Server 2003 without complaint, so I configured the box as a VPN gateway for my network and also installed the Team Foundation Server Version Control Proxy on it.

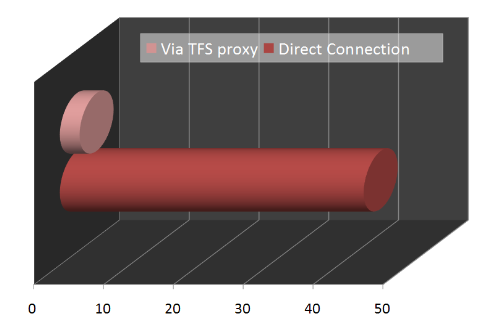

All I can say is that I wish I had done this earlier. Our main development branch currently contains 4,501 files in 706 folders, taking up 70 MB. As you can tell, the majority of the files are small (Java source) files, whereas the TFS Version Control Proxy gives its most impressive speed improvements with large files. Even so, a full clean get of the main development branch has gone from 43 minutes 33 seconds to an average of 2 minutes 50 seconds, roughly a 15-fold performance improvement.
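To make the arithmetic behind the 15-times figure explicit, here is a quick check. The second calculation is an addition of mine, not one of the measurements above: it estimates how long 70 MB would take at the line's nominal 2 Mbps, assuming 1 MB = 8 megabits and ignoring compression and protocol overhead.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a minutes/seconds timing into whole seconds."""
    return minutes * 60 + seconds


without_proxy = to_seconds(43, 33)  # full clean get straight over the VPN
with_proxy = to_seconds(2, 50)      # average full clean get via the local proxy
speedup = without_proxy / with_proxy

# Naive lower bound for pulling 70 MB down a 2 Mbps line, assuming
# 1 MB = 8 megabits and ignoring compression and protocol overhead.
branch_megabits = 70 * 8
line_mbps = 2
bandwidth_floor = branch_megabits / line_mbps

print(f"speedup: {speedup:.1f}x")                   # speedup: 15.4x
print(f"bandwidth floor: {bandwidth_floor:.0f} s")  # bandwidth floor: 280 s
```

If that rough floor of under five minutes is anywhere near right, most of the original 43 minutes was not spent moving bytes, which fits a branch made of thousands of small files: per-file overhead across the VPN, rather than raw bandwidth, is the likely bottleneck.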

I don't see this sort of speed improvement all the time, because in the majority of cases I just do a Get Latest to update the 20 or so files that have changed since my last Get Latest. It is, however, invaluable when I switch branches or want to run a clean build. During some performance testing, even my pint-sized server was not under any real load during a full get of the trunk, so I could probably use it to host a small remote team of about 5-10 people reasonably easily.

Anyway, I just wanted to post about my small TFS proxy server (though not quite the smallest TFS appliance). If you are running TFS and have a remote office connecting to it, I urge you to set aside a spare machine to run as a TFS proxy server at the remote site. It doesn't have to be particularly high-powered, or dedicated to the task, for you to get some serious benefit from it.

This makes no sense. If you are the only person using the proxy, the files on the proxy should always be the same as the ones in your workspace. Is there something you failed to mention? Is the proxy hit by others?

Chris,

As I mentioned, the performance improvements are greatest when doing a full clean get of files that I have (mostly) already downloaded before. I do this more than most people, as I often create a new workspace to do some work in, and that requires me to get a branch of the source tree onto my machine again.

As you say, using the TFS Proxy for the "average" single remote user won't speed things up for them in day-to-day use, because (as you point out) the majority of their requests will not result in a cache hit.
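A toy model makes the point concrete. It assumes the proxy simply remembers every (item, version) pair it has served; the real proxy's cache internals are not described here, and the file names are illustrative. The incremental Get Latest in step 2 misses because that version has never passed through the proxy, while the full get into a fresh workspace in step 3 hits on everything.

```python
class ToyProxyCache:
    """Toy model: the proxy keeps every (item, version) it has ever served."""

    def __init__(self) -> None:
        self.cached = set()
        self.hits = 0
        self.misses = 0

    def download(self, item: str, version: int) -> None:
        key = (item, version)
        if key in self.cached:
            self.hits += 1    # served from the local proxy
        else:
            self.misses += 1  # fetched across the WAN from the server, then cached
            self.cached.add(key)


proxy = ToyProxyCache()
branch = {"A.java": 1, "B.java": 1, "C.java": 1}

# 1. First full get: nothing is cached yet, so every file misses.
for item, version in branch.items():
    proxy.download(item, version)

# 2. A colleague checks in a new A.java; my Get Latest asks only for that version.
branch["A.java"] = 2
proxy.download("A.java", 2)

# 3. Full get into a brand-new workspace: every current version is already cached.
for item, version in branch.items():
    proxy.download(item, version)

print(proxy.hits, proxy.misses)  # 3 4
```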

Funnily enough, I'm currently writing a service that lives on the TFS proxy, subscribes to the check-in event and pre-caches the checked-in version of each file on the remote proxy server. That will help users on remote sites by increasing the likelihood of cache hits.
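A minimal sketch of that idea, assuming a simplified event that carries the changeset number and a list of (server path, change type) pairs. `CheckinEvent`, `PreCacher`, and the `fetch` hook are hypothetical names, and the `$/Project/...` paths are placeholders; the real check-in event schema and the call that downloads an item through the proxy would have to come from the TFS SDK.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class CheckinEvent:
    """Hypothetical, simplified check-in notification (not the real TFS event schema)."""
    changeset: int
    changes: List[Tuple[str, str]]  # (server_path, change_type) pairs


class PreCacher:
    """Warms the proxy by downloading each newly checked-in version through it."""

    def __init__(self, fetch: Callable[[str, int], None]):
        # fetch(server_path, changeset) is a hypothetical hook that downloads one
        # item version *through the proxy*, which leaves a copy in its cache.
        self.fetch = fetch
        self.failed: List[Tuple[str, int, Exception]] = []

    def on_checkin(self, event: CheckinEvent) -> int:
        """Fetch every non-deleted item in the changeset; return how many were fetched."""
        warmed = 0
        for path, change_type in event.changes:
            if change_type == "delete":
                continue  # a deleted item has no new content to cache
            try:
                self.fetch(path, event.changeset)
                warmed += 1
            except Exception as exc:
                # Record and carry on: one failed download shouldn't stop the rest.
                self.failed.append((path, event.changeset, exc))
        return warmed


# Usage with a fake fetch hook that just records what it was asked for.
fetched = []
pre = PreCacher(fetch=lambda path, cs: fetched.append((path, cs)))
warmed = pre.on_checkin(CheckinEvent(
    changeset=1234,
    changes=[("$/Project/Main/A.java", "edit"), ("$/Project/Main/Old.java", "delete")],
))
print(warmed, fetched)  # 1 [('$/Project/Main/A.java', 1234)]
```

Keeping the download behind a plain callable means the event handling can be tested without a server, and the real proxy download can be swapped in later.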

Cheers,

Martin.

A really simple, though less elegant, approach to pre-loading the cache is to have a workspace on some machine (maybe even the proxy itself) and run "tf get" in it every 15 minutes via the Windows Task Scheduler. To save space, you could delete the files in the workspace each time, since the server keeps track of what the workspace "has."
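A sketch of that scheduled job in Python, assuming the workspace root is already mapped on the machine and that the `tf` client there is configured to download through the proxy. The `tf get . /recursive /noprompt` invocation is an assumption about the right options; check the `tf get` documentation for your client version. The cleanup clears the read-only flag first, because TFS leaves fetched files read-only and Windows refuses to delete those.

```python
import os
import stat
import subprocess
import sys
from pathlib import Path


def clear_workspace(root: Path) -> None:
    """Delete everything under root (but keep root itself), including read-only files."""
    for dirpath, dirnames, filenames in os.walk(root, topdown=False):
        for name in filenames:
            path = os.path.join(dirpath, name)
            os.chmod(path, stat.S_IWRITE)  # TFS leaves fetched files read-only
            os.remove(path)
        for name in dirnames:
            # Bottom-up walk: this subfolder's contents were removed on an earlier pass.
            os.rmdir(os.path.join(dirpath, name))


def warm_cache(root: Path) -> None:
    """Run one get through the proxy, then throw the local copies away."""
    # The server still records these versions as present in the workspace,
    # so the next run only downloads what has changed since this one.
    subprocess.run(["tf", "get", ".", "/recursive", "/noprompt"], cwd=root, check=True)
    clear_workspace(root)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python warm_proxy.py C:\ProxyWarmer
    warm_cache(Path(sys.argv[1]))
```

One way to run it every 15 minutes would be `schtasks /create /sc minute /mo 15 /tn WarmTfsProxy /tr "python C:\tools\warm_proxy.py C:\ProxyWarmer"`, where both paths are hypothetical.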

I have something similar to what Buck described on one of our local proxies. I think it might even be set to locally delete everything under the workspace root, as he suggested (that box's drive isn't particularly huge).

Of course, where that falls short compared to your approach is the fact that it has to compute the get every time - yours should be able to avoid that - and it has to have the superset of everyone's mappings, which means that get can be VERY expensive.

The only downside of yours that I can think of is that if you *don't* want every file on the server, you'd have to filter the items from the events through the mappings you care about - somehow. But, you probably have the whole tree mapped *somewhere* amongst your various workspaces so I doubt that applies to you.
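One simplified way to do that filtering: treat each mapping as a recursive server folder, allow cloaks, let the deepest matching entry win, and compare server paths case-insensitively. This ignores non-recursive (one-level) mappings, and the function names and `$/Project/...` paths are illustrative only.

```python
from typing import Iterable, List, Tuple

# (server_folder, cloaked) pairs; a cloak hides a subtree of a mapped folder.
Mapping = Tuple[str, bool]


def _norm(path: str) -> str:
    # Server paths are compared case-insensitively, without a trailing slash.
    return path.rstrip("/").lower()


def _is_under(path: str, folder: str) -> bool:
    # Match the folder itself or anything below it, but not "$/MainOld" for "$/Main".
    return path == folder or path.startswith(folder + "/")


def is_mapped(server_path: str, mappings: Iterable[Mapping]) -> bool:
    """True if the deepest mapping covering server_path is not a cloak."""
    path = _norm(server_path)
    best_depth, best_cloaked = -1, True
    for folder, cloaked in mappings:
        f = _norm(folder)
        if _is_under(path, f) and f.count("/") > best_depth:
            best_depth, best_cloaked = f.count("/"), cloaked
    return best_depth >= 0 and not best_cloaked


def items_to_precache(changed: Iterable[str], mappings: List[Mapping]) -> List[str]:
    """Keep only the changed server paths that some workspace actually maps."""
    return [p for p in changed if is_mapped(p, mappings)]


mappings = [
    ("$/Project/Main", False),
    ("$/Project/Main/docs", True),  # cloaked inside Main
    ("$/Project/Branches/Release", False),
]
changed = [
    "$/Project/Main/src/A.java",
    "$/Project/Main/docs/guide.doc",
    "$/Project/Branches/Experimental/B.java",
    "$/Project/MainOld/C.java",
    "$/project/main/build.xml",
]
print(items_to_precache(changed, mappings))
# ['$/Project/Main/src/A.java', '$/project/main/build.xml']
```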

I think, somewhere between the two, lies a great power tool or product idea...